Making Pigs Fly (AKA Getting the Verifier To Approve eBPF Code)

Your eBPF code may work on your system, but the verifier won't let it work anywhere else.

Where we started

We originally developed on Ubuntu 24.04 on an arm64 chipset (because I develop on macOS and run my eBPF code in a VM). So the one thing we were sure of was that our eBPF code worked on a 6.8 kernel running on an arm64 system.

In theory, we knew our code should run on all kernel versions >= 5.18. We picked that floor because we use bpf_loop, which older kernels lack.

bpf_loop is a helper function we use in our eBPF code that allows us to set higher loop limits, enabling us to accurately read filenames. Without bpf_loop, we would be restricted to reading the first 256 characters of a filename, which isn’t that good.
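
As a rough illustration of the callback shape bpf_loop expects, here is a minimal sketch in plain C. Everything named here is hypothetical: read_ctx, copy_chunk, CHUNK, DST_MAX, and loop_standin, which is a userspace stand-in that mimics bpf_loop's documented behavior so the snippet can run outside the kernel. It is not our actual filename-reading code.

```c
#include <stdint.h>

#define CHUNK   4   /* hypothetical chunk size, kept tiny for readability */
#define DST_MAX 64  /* hypothetical destination capacity */

/* State shared between the caller and the loop callback. */
struct read_ctx {
    const char *src;
    uint32_t src_len;
    char dst[DST_MAX];
    uint32_t copied;
};

/* Callback in the shape bpf_loop expects: it receives the iteration
 * index and a context pointer, and returns 0 to continue or 1 to stop. */
static long copy_chunk(uint32_t index, void *data)
{
    struct read_ctx *c = (struct read_ctx *)data;
    uint32_t off = index * CHUNK;

    if (off >= c->src_len || off >= DST_MAX)
        return 1; /* nothing left to read, stop early */

    for (uint32_t i = 0; i < CHUNK; i++) {
        uint32_t pos = off + i;
        if (pos >= c->src_len || pos >= DST_MAX)
            break;
        c->dst[pos] = c->src[pos];
        c->copied++;
    }
    return 0;
}

/* Userspace stand-in (an assumption, for illustration only): runs the
 * callback up to nr_loops times and returns how many iterations ran,
 * which is how bpf_loop is documented to behave. */
static long loop_standin(uint32_t nr_loops,
                         long (*cb)(uint32_t, void *),
                         void *ctx, uint64_t flags)
{
    (void)flags; /* the real helper requires flags to be 0 */
    for (uint32_t i = 0; i < nr_loops; i++) {
        if (cb(i, ctx))
            return (long)i + 1;
    }
    return (long)nr_loops;
}
```

In a real eBPF program the call would be bpf_loop(nr_loops, copy_chunk, &ctx, 0), and the reads inside the callback would go through a helper such as bpf_probe_read_kernel rather than direct indexing.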

Newer Kernels Can Break eBPF

But practice is quite different from theory. When we tried to run our code on a 6.18 kernel, our eBPF code failed to pass the verifier, and we were stumped. Our eBPF code used CO-RE helpers, so why wasn’t it passing the verifier in this new kernel version?

We realized the problem wasn’t that our code could not work on newer kernels. The verifier itself had improved, and the new, improved verifier no longer approved one of our LSM hooks, on bprm_check_security.

What happened was that, once our eBPF code had been optimized, the verifier could no longer prove that the return value of this specific hook was safe. To fix this, we used barrier_var, a compiler barrier that keeps our return value from being optimized, which let the verifier confirm it was safe.
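
For readers who have not used it, barrier_var is a one-line macro from libbpf's bpf_helpers.h. The sketch below repeats that definition so it compiles on its own and applies it to a return value. The policy function and flag (is_denied, DENY_FLAG, hook_result) are hypothetical, and this is not our actual bprm_check_security hook.

```c
#include <stdint.h>

/* Same definition libbpf provides in bpf_helpers.h, repeated here so the
 * snippet builds standalone. The empty asm makes the compiler treat the
 * variable as possibly modified, so it cannot optimize across it. */
#ifndef barrier_var
#define barrier_var(var) __asm__ volatile("" : "+r"(var))
#endif

#define DENY_FLAG 0x1u /* hypothetical policy bit */

/* Hypothetical stand-in for the decision an LSM hook makes. */
static inline int is_denied(uint32_t flags)
{
    return (flags & DENY_FLAG) != 0;
}

/* Shape of the fix: compute the result into a local, pin it with
 * barrier_var, then return it. */
int hook_result(uint32_t flags)
{
    int ret = 0;      /* 0 = allow */

    if (is_denied(flags))
        ret = -1;     /* -EPERM = deny */

    barrier_var(ret);
    return ret;
}
```

In the real program this body would sit inside a function marked SEC("lsm/bprm_check_security").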

Older Kernels Can Break Stuff Too!

Once we did that, we also ran our code on the lowest kernel version we supported, 5.18, and it didn’t work either! This was the same problem we had with running on 6.18, but in reverse.

The kernel was older than the one we normally ran, so some of the verifier improvements that newer kernels have didn’t exist on this older one, and code that passed on 6.8 was rejected here.

In our case, we had to fix a couple of things.

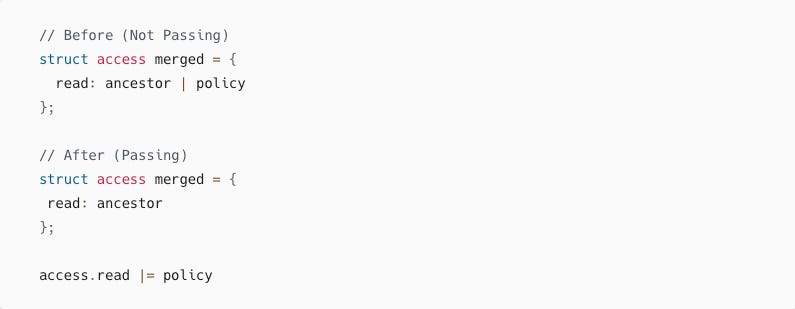

We were computing a bitwise operation between two elements and assigning the result directly to a variable. Instead, we had to split up the operations: first write to the variable, and then apply the bitwise operation to it.
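
The image above shows our real change. As a generic sketch of the same pattern, with hypothetical names (combine_direct, combine_split), the two forms look like this in plain C. Both compute the same value; only the sequence of steps differs. A compiler is free to merge the two statements again, so in practice a barrier_var on the variable may still be needed.

```c
#include <stdint.h>

/* Before: the bitwise operation and the assignment happen in one step. */
uint32_t combine_direct(uint32_t a, uint32_t b)
{
    uint32_t result = a & b;
    return result;
}

/* After: write the first operand to the variable, then apply the
 * bitwise operation to that variable as a separate step. */
uint32_t combine_split(uint32_t a, uint32_t b)
{
    uint32_t result = a;
    result &= b;
    return result;
}
```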

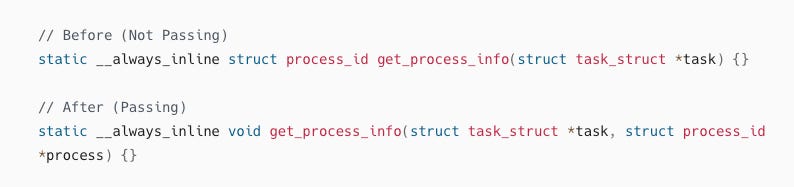

We were returning values from inline helper functions, and the verifier couldn’t verify them. Once we changed the inline helpers to take a buffer and write their values into it instead of returning them, the verifier approved the code.
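
A generic sketch of that change, using hypothetical helpers (name_len_ret, name_len_out, measure) rather than our real ones: the first version returns its result, and the second writes it through a pointer the caller supplies.

```c
#include <stdint.h>

#define NAME_MAX_LEN 16 /* hypothetical bound on how far we scan */

/* Before: the inline helper returns its result directly. */
static inline __attribute__((always_inline))
uint32_t name_len_ret(const char *name)
{
    uint32_t n = 0;
    while (n < NAME_MAX_LEN && name[n] != '\0')
        n++;
    return n;
}

/* After: the caller passes storage and the helper writes into it. */
static inline __attribute__((always_inline))
void name_len_out(const char *name, uint32_t *out)
{
    uint32_t n = 0;
    while (n < NAME_MAX_LEN && name[n] != '\0')
        n++;
    *out = n;
}

/* Caller side of the "after" version. */
uint32_t measure(const char *name)
{
    uint32_t len = 0; /* initialized so the storage is never read unset */
    name_len_out(name, &len);
    return len;
}
```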

Chipsets also break things

It turns out our eBPF code didn’t work on x86 either! I am pretty sure, though I haven’t confirmed it, that the reason is that the verifier is stricter on x86 and that our eBPF code may also be optimized differently there.

Now I don’t have an example of what happened in our eBPF code, nor do I know how we fixed it, since I can’t locate the commit that did. Needless to say, it was quite irritating to fix.

Fixing verifier messages

Now these problems aren’t exactly easy to diagnose. In our codebase, since most of our code was in inline helper functions, the errors would just point to the failing hook and the register that was the problem, which is quite opaque on the surface.

These errors, at least for me, are quite hard to understand. The best way I have found to make sense of them is to look them up and learn what the registers mean and how the eBPF verifier behaves in different scenarios. Many times you can’t find an example online, so to write good eBPF programs you will have to learn what these errors mean. In the past couple of years, though, debugging verifier errors has become a whole lot easier because of LLMs.

While I still don’t think LLMs can write good eBPF code (they may be able to write something that compiles, but once you read it, you will be horrified by what they wrote), LLMs are surprisingly good at tracking down where the errors are coming from.

LLMs are quite bad at fixing these errors, and there is a good chance that they totally hallucinate what the real problem is. But if you can get them to help you understand which register corresponds to which variable, and what the error message could mean in your context, debugging these errors becomes a whole lot easier. This works a whole lot better if the LLM has a web search option.

Testing on multiple kernels

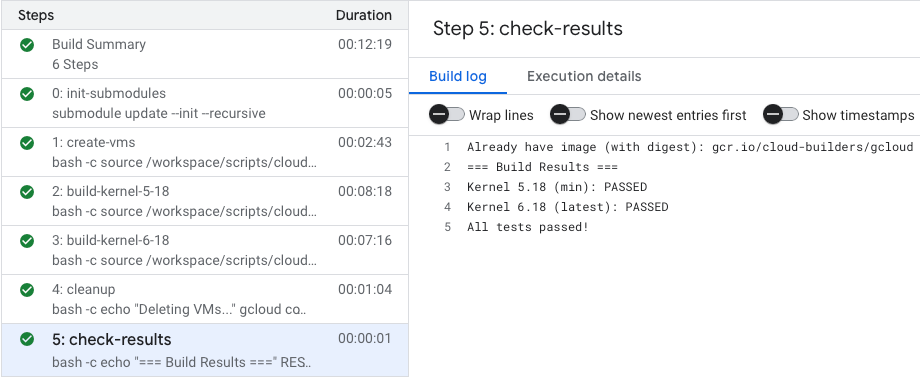

After we realized all of this, we set up automated testing jobs that run our code on the earliest and latest kernels we support. The jobs run on our own self-hosted runners (not GitHub’s runners), one with 5.18 and one with the latest stable kernel (6.18). We deploy them from two custom images made with Packer.

We don’t use GitHub’s runners because LSM cannot be enabled on them, and our eBPF code is primarily built on LSM hooks. GitHub’s runners also come with a lot of bloat and a lot of vulnerabilities, so for security’s sake, running our own VMs made more sense to us: https://substack.bomfather.dev/p/githubs-ubuntu-latest-runners-have